Research project

Music Cognition

Knowledge and culture subproject 1: "Music Cognition" of Leiden University Centre for Linguistics

- Duration: 2013 - 2017

- Contact: Johan Rooryck

- Funding: NWO Horizon grant

Music and language have a lot in common. Both are rule-governed and subject to recursion, the ability to indefinitely lengthen sentences or musical pieces. Infants acquire both music and language effortlessly, suggesting an innate ability. Predispositions for music are related to those abilities that distinguish humans from other animals and emerge prior to birth (Trehub 2003; Zentner & Kagan 1996).

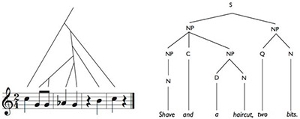

Developing earlier proposals by Lerdahl & Jackendoff (1983), the theoretical linguists Pesetsky & Katz (2009) argue that music and language share a common syntactic component: all formal differences between language and music derive from differences in their fundamental building blocks. This hypothesis finds some support from the neuroscience of music (Patel 2008).

In developmental psychology, research on music ability and activity in infants has yielded fascinating results as to which aspects of musical disposition are part of nature and which ones should be ascribed to nurture (see Trehub 2003 for an overview).

Infants’ perception of consonant intervals is more precise than their perception of dissonant intervals (Schellenberg & Trehub 1996), suggesting that a preference for consonance is innate. Hannon & Trehub (2005) showed that 6-month-old infants can recognize rhythmic perturbations in rhythmically complex Bulgarian music, while their North American parents have (apparently) become insensitive to these differences. Lastly, beat induction, a human-specific and domain-specific skill, is already functional right after birth (Winkler et al. 2009). These results count as emerging evidence for a human predisposition for music.

Finally, it is important to point out that these predispositions for consonance, melody and intonation, rhythm, and beat induction are independent of the development of language. They may rather be seen as dependent on language-independent core knowledge systems, such as the systems for number and geometry. At the same time, language encapsulates musical elements such as intonation and metrics, which will be the topic of the research theme on Poetry, rhythm, and meter.

Postdoc Project (Paula Roncaglia-Denissen): What is shared (and what is unique) in music and language

For the implementation of this subproject, see Paula Roncaglia-Denissen's page.

The Postdoc project will investigate the nature of the relationship between music and language. The researcher will attempt to integrate the insights from both research traditions introduced above, the theoretical-linguistic tradition and the developmental-experimental studies, and combine them with recent insights from the field of music cognition (cf. Honing 2011).

The interest in the relationship between music and language is a long-standing one. While Lerdahl & Jackendoff (1983) built mostly on insights from the metrical phonology of the time, more recent studies (Pesetsky & Katz 2009) draw attention to the parallels with current minimalist syntactic theory (Chomsky 1995) rather than phonology. However, if a large part of music is indeed preverbal (Honing 2010; Trehub 2003), does this also mean that syntactic or phonological operations and structures used in linguistics are preverbal as well? The project will inquire to what extent the results of both research traditions are complementary and where substantial gaps remain. A second question addressed in the Postdoc project is that of the intersection between music and language: what exactly are the core knowledge systems that are shared by music and language, and why are exactly these mechanisms shared rather than others?

So far, the evidence for these conjectures has remained inconclusive. However, there are good reasons to believe that the search for shared processes and resources should continue. The strongest arguments derive from evolutionary considerations on the rather large set of cognitive functions that appear to be unique to humans. This suggests, for instance, that language and music will share properties with animal cognition (Fitch 2006), but at the same time that their human-specific features derive from a single common source (Hauser & McDermott 2003).

On the other hand, there are also compelling reasons to consider music and language as two distinct cognitive systems. Recent findings in the neuroscience of music suggest that music is likely a cognitively unique and evolutionarily distinct faculty (Peretz & Coltheart 2003). We will refer to this position as the modularity hypothesis. There is also considerable neurological evidence suggesting a dissociation between music and language functions (e.g., patients who, because of neurological damage, suffer from amusia while maintaining their ability to recognize words, and vice versa; cf. Peretz & Coltheart 2003). This position can be contrasted with the resource-sharing hypothesis, which suggests that music and language share processing mechanisms, especially those of a syntactic nature, and that they differ only in the lexicon used (Patel 2008). In the proposed research, we aim to identify what is shared and what is special about music and language.

A clear candidate for a shared mechanism is syntactic processing, which is well attested in both language and music (Lerdahl & Jackendoff 1983; Patel 2008). The aim of the current project is to investigate to what extent the evidence is converging, combining insights from the research traditions mentioned at the beginning of this subproject.

A clear candidate for a special mechanism, a function that seems music-specific, is beat induction, which seems to be limited to music and apparently absent from natural language. Picking up this regularity in music allows us to dance and make music together, and it is therefore considered a fundamental cognitive mechanism that might have contributed to the origins of music (Honing 2012; Winkler et al. 2009). Interestingly, Patel (2008:404) suggests that beat induction is an exception to his resource-sharing hypothesis, and a realistic candidate for a cognitive ability that is specific to music.

PhD project (Joey Weidema): Relative pitch in music and language

For the implementation of this subproject, see Joey Weidema's page.

The PhD project addresses the cognitive mechanism of relative pitch and how this human skill can shed light on musical ability and on the relation between music and language. Just like beat induction (see the Postdoc project above), relative pitch has been argued to be a fundamental musical skill that is species-specific and domain-specific (McDermott & Hauser 2005; Peretz & Coltheart 2003).

With regard to pitch perception, a significant amount of information is encoded in the contour patterns (i.e. rises and falls) of the pitch of acoustic signals, both in speech and music. For example, humans can easily recognize sentence types (e.g., statement, question, warning) on the basis of pitch contour alone in the absence of other information (Ladefoged 1982). Transpositions of a melody to a different frequency range are readily recognized by adult and infant listeners alike as the ‘same’ melody, and are perceived as structural equivalents of the original (Trehub & Hannon 2006). Although human listeners can remember the exact musical intervals of familiar melodies, they appear to remember only the melodic contour of less familiar or novel stimuli (Dowling 1978). Unlike humans, who attend primarily to the relationships between sound elements, animals weight the absolute frequency of sound elements more heavily in their perceptual decisions and appear to be less sensitive to relative pitch changes (Yin et al. 2010).
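The difference between exact intervals and melodic contour can be made concrete with a minimal sketch. It assumes, purely for illustration, that a melody is encoded as a list of MIDI note numbers (one integer per semitone). The function names `intervals`, `contour` and `transpose` and the example melodies are hypothetical and are not taken from the cited studies.

```python
def intervals(melody):
    """Signed semitone steps between successive notes (relative pitch)."""
    return [b - a for a, b in zip(melody, melody[1:])]


def contour(melody):
    """Direction of each step: +1 for a rise, -1 for a fall, 0 for a repeat."""
    return [(step > 0) - (step < 0) for step in intervals(melody)]


def transpose(melody, semitones):
    """Shift every note by the same number of semitones."""
    return [note + semitones for note in melody]


# Illustrative data (an assumption, not a stimulus from the literature).
original = [60, 62, 64, 60]          # C D E C
transposed = transpose(original, 5)  # the same tune, a fourth higher
variant = [60, 63, 65, 60]           # same rises and falls, other step sizes

print(intervals(original))    # [2, 2, -4]
print(intervals(transposed))  # [2, 2, -4]  identical: intervals ignore key
print(intervals(variant))     # [3, 2, -5]  exact intervals differ
print(contour(variant))       # [1, 1, -1]  contour matches the original
```

In this encoding a transposition changes every absolute pitch but none of the intervals, while the variant preserves only the contour. This mirrors the two levels of melodic memory described above, without claiming anything about how listeners actually compute them.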

Developmental psychologists have shown that the aptitude for both absolute and relative pitch is present in all babies. However, by the time they are a few months old, a hierarchy in these abilities emerges, and babies gradually listen more to the relative aspects of a melody than to the absolute, actual pitch of the notes (Trehub & Hannon 2006). Relative pitch outclasses absolute hearing, as it were.

Moreover, initial experimental evidence suggests that animals have no relative pitch, only absolute pitch (Yin et al. 2010). Research on rhesus monkeys showed that they judged melodies as similar only if they heard them at exactly the same pitch or if they were played one or more octaves higher or lower than before (Wright et al. 2000). A melody that was played only a few tones higher or lower was judged as dissimilar. Songbirds, too, seem primarily attentive to absolute pitch. For them as well, a melody sung some semitones higher or lower represents a different melody (Kass et al. 1999).
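The similarity criteria contrasted here can be written out as three predicates. This is a deliberately simplified sketch, not a model from the cited experiments: melodies are again assumed to be lists of MIDI note numbers, and the function names are hypothetical.

```python
def same_absolute(a, b):
    """Match only if every note has exactly the same pitch."""
    return a == b


def same_up_to_octaves(a, b):
    """Match if b equals a shifted by a whole number of octaves (12 semitones)."""
    if len(a) != len(b) or not a:
        return False
    shift = b[0] - a[0]
    return shift % 12 == 0 and all(y - x == shift for x, y in zip(a, b))


def same_relative(a, b):
    """Match if the successive intervals agree, whatever the starting pitch."""
    def steps(melody):
        return [q - p for p, q in zip(melody, melody[1:])]
    return len(a) == len(b) and steps(a) == steps(b)


# Illustrative melodies (assumptions, not experimental stimuli).
melody = [60, 62, 64, 60]
octave_up = [72, 74, 76, 72]     # shifted by 12 semitones
few_tones_up = [63, 65, 67, 63]  # shifted by 3 semitones

print(same_up_to_octaves(melody, octave_up))     # True
print(same_up_to_octaves(melody, few_tones_up))  # False
print(same_relative(melody, few_tones_up))       # True
```

Under these assumptions, the octave-based criterion accepts the octave shift but rejects the three-semitone shift, whereas the interval-based criterion accepts both. That is the contrast between the reported animal judgments and human relative pitch.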

Apart from the fact that relative pitch enables us to recognize melodies without being influenced by their absolute pitch, this uniquely human skill is extremely helpful in recognizing many other melodic variants. As with beat induction, a more abstract way of listening is required. Thanks to relative pitch, humans are not only able to recognize two melodies as the same tune, but they can also identify one melody as a variant of another. How humans achieve this is still unclear.

The questions addressed in this PhD project are the following:

- What is the evidence for relative pitch as an innate or at least a spontaneously developing skill?

- Is relative pitch shared with language, or are two different pitch perception systems involved for music and language?

- What is the role of relative pitch in cultures with a tonal language? How does the semantics marked by pitch interact with the constraints of music?

- Is there a relation between relative pitch and the core knowledge systems of number or geometry? If so, what is its nature?

These questions aim at determining whether relative pitch perception is modular or shared, and are in that sense similar to those of the Postdoc project. In addition to analyzing and interpreting the available evidence for either position, the PhD project will focus on the interaction between congenital amusia (or tone deafness; being unable to detect an out-of-tune note in a melody) and tone language processing. Some recent studies suggest modularity, with limited transfer from one domain to the other (Liu et al. 2010; Nguyen et al. 2009).

Finally, some evidence suggests that amusics have problems with subtle speech prosody, but no difficulty with ‘natural’ sentence stimuli (Patel et al. 2008). Interestingly, amusia also seems to be associated with deficits in spatial processing (Douglas & Bilkey 2007). Hence it could be that amusia is in fact a failure to implement a spatial representation of relative pitch (Williamson, Cocchini & Stewart 2011), linking the project on pitch to the core knowledge system of geometry.