Research project

Smarter edits: Post-editing translations with LLM suggestions

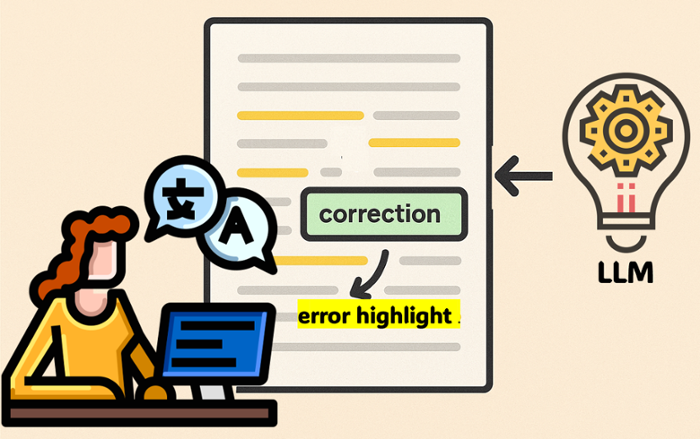

Can smart suggestions help translators work better with machine translation? As machine translation becomes the norm, professional translators are asked to correct its output, a process called post-editing. But how can we make this easier and more effective?

- Duration: 2025 - 2026

- Contact: Alina Karakanta

- Funding: European Association for Machine Translation (EAMT)

This project explores whether showing translators error highlights and translation corrections generated by large language models can improve their speed, accuracy, and confidence. The goal is to make translation tools smarter, more helpful, and better suited to human expertise.

Generative large language models (LLMs) have demonstrated remarkable proficiency not only in machine translation (MT) but also in several translation-related tasks, including assessing MT quality, highlighting potential errors, and suggesting translation corrections. Despite the growing interest in explainable MT evaluation, the usefulness of such features in helping translators post-edit machine-translated texts has not been explored. Could error highlights and correction suggestions enhance productivity by helping translators post-edit machine-translated texts more quickly and efficiently? As MT outputs improve in quality and errors become increasingly difficult to spot, could such features help translators detect errors, resulting in higher-quality translations? How much information best supports translators without disrupting the editing process? Given the fluency of explanations, how does translators’ confidence in themselves, as opposed to their confidence in AI, evolve, and how does this affect their decision-making? Does translation expertise play a role in their decisions?

This project addresses these questions by incorporating automatic error annotations and correction suggestions into post-editing workflows. To this end, two studies with professional and student translators are conducted in the language pair English→Dutch. Translators are asked to post-edit machine-translated texts in three conditions: (1) post-editing without suggestions, (2) post-editing with error span annotations, and (3) post-editing with translation corrections. We collect several types of information to understand how translators work in these conditions. This includes:

- How long it takes them to complete each post-editing task

- How much they type, measured by the number of keystrokes

- How good the final translation is, based on expert assessment

- Whether they catch important errors in the machine translation

- Their opinions, gathered through questionnaires and interviews, on how helpful and accurate they found the annotations and suggestions

- How confident they felt in their translation choices while working on the task

This project helps us understand how best to support professional translators when they work with machine-generated texts. By improving how potential errors are highlighted and how corrections are suggested, we can make translation work faster and less tiring, reduce the chance of serious errors being missed, and improve the quality and reliability of translated content in areas like healthcare, law, and public information.

Alina Karakanta, Fleur van Tellingen, Gautam Ranka. 2025. Improving error span prediction for human post-editing. In Proceedings of the 35th Meeting of Computational Linguistics in The Netherlands (CLIN 35). Leuven, 12 September 2025. https://www.ccl.kuleuven.be/CLIN35/posters.html