Robots and our data: opportunity or danger?

Self-driving cars, surgical robots, and stock market algorithms: the use of robots and Artificial Intelligence (AI) is increasing rapidly. What opportunities does this development offer, and what are its dangers? The Honours Class ‘Robot Law: Regulating Robot and AI Technologies’ prepares students for the future.

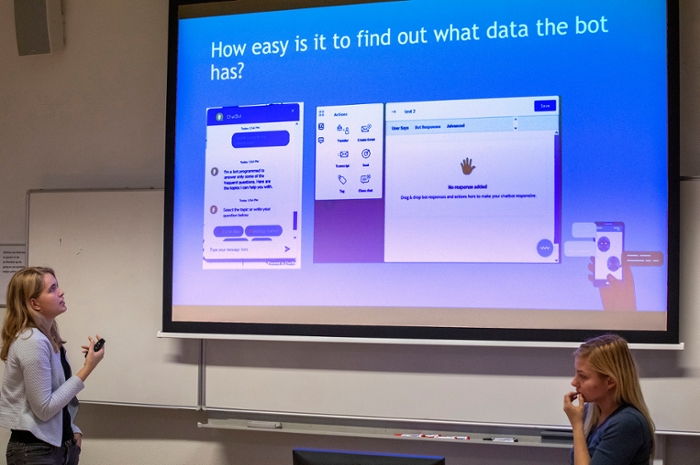

In a classroom of the Kamerlingh Onnes Building, students take their seats in a U-formation. 'I want students to face each other, so there is room for discussion and no one feels excluded,' says Dr Eduard Fosch-Villaronga, instructor of the course and researcher at the eLaw Center for Law and Digital Technologies. Today, the Honours participants have prepared group presentations. The topic: do robot and AI technologies comply with the EU’s General Data Protection Regulation (GDPR)?

Vague, delayed or incomplete

'The GDPR works well in theory, but not in practice,' is the conclusion of the first group, which investigated chatbots in relation to the right of access. This is the right to know what data a company has gathered about you, for what purpose, and with whom it has been shared. The students found that obtaining this kind of information is harder than it should be. For example, on the websites of a large international bank and a Dutch webshop, a request for access can be submitted, but not via the chatbot itself.

When access is granted, other problems arise. Information arrives late (a response may take up to 30 days) or is incomplete: the webshop does not tell you what data its bot has collected, only that it has done so. Even chatbots that are transparent can still violate the GDPR. Another webshop's chatbot, for instance, gathers most data automatically rather than with the user's consent. On top of that, the company is, as the students put it, 'vague' about how it shares data with others.

Lose control

Yet it is precisely this sharing of data between companies that threatens the right to be forgotten. That is the view of a second team, whose members investigated deepfake technology: the manipulation of images in a hyperrealistic way. An infamous example is the Chinese app Zao. This face-swap application claims the right to use any image created on it free of charge, as well as to transfer this authorization to third parties without further permission. Those third parties can in turn share data with others, making it practically impossible to track the data, let alone erase it. 'You basically lose control over your face,' a presenter concludes, shock audible in her voice.

Give something back

Four presentations, three hours, and many GDPR violations later, the instructor thanks his students for their 'brilliant work'. Tired but satisfied, the Honours participants head home. Fosch-Villaronga hopes they enjoyed learning from each other today: 'That’s what Honours is about: not excelling at complying with requirements, but enjoying the experience of learning itself.' To achieve that, he uses many different learning activities, such as discussions, movie nights, and a visit to AiTech in Delft: 'We all have different ways of learning and expressing ourselves.'

Fosch-Villaronga is grateful for the opportunity to teach: 'Where I am from, there were no courses on data protection, robots, or digital technologies. Along the way, however, I found professors who shed light on what seemed a very uncertain future.' Now he wants to give something back: 'I want to help future generations learn about developments that are very new and have an impact on our lives.'

Text: Michiel Knoester

Photography: André van Haasteren
