Ethics: how selfless should a self-driving car be?

Intelligent machines are going to make ethical decisions too. Should a self-driving car be allowed to slam into pedestrians to save its passengers from a head-on collision? Should a negotiation app be able to detect stress in your opponent’s voice? And who makes these decisions: the user, the system’s designer or the law?

'I chose not to build this feature into the app'

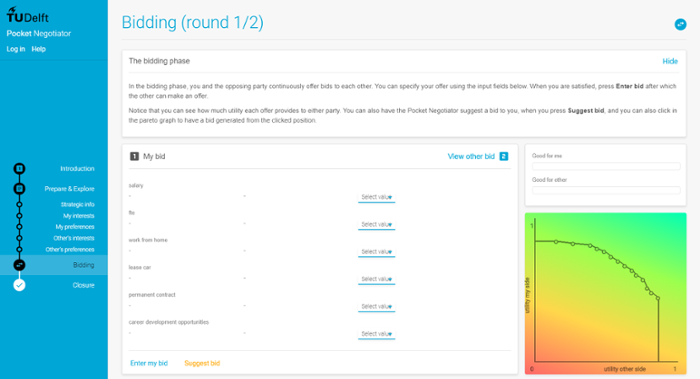

AI often depends on big data: a neural network needs millions of sample images or words of text to learn an ‘intelligent’ task, such as recognising people. However, Catholijn Jonker, Professor of Explainable Artificial Intelligence, develops AI that depends on small data. For instance: the Pocket Negotiator, a prototype app that helps you negotiate the best price for a house or the best benefits package for a new job.

This AI system starts off knowing little about you or your opponent, and the little that it does know is uncertain. Jonker: ‘You don’t know what the other person knows, nor do they want you to find out.’ Only when the bidding process gets underway does the app get solid information from the opponent: the bids that they make. The system has to use this information to discover the intentions of your human opponent. This is why Jonker, who also works on AI at Delft University of Technology, works part time in Leiden: ‘You can involve psychologists who study negotiation in your research.’
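To give a feel for how bids alone can reveal intentions, here is a minimal sketch in Python. It is not the Pocket Negotiator's actual method, which the article does not describe. It assumes a simple heuristic: an issue on which the opponent never budges probably matters more to them than one they change freely. The function name, the issues and the bids are all made up for illustration.

```python
def estimate_issue_weights(bids):
    """Guess how much the opponent cares about each issue.

    Assumed heuristic (not taken from the article): the more often an
    issue stays unchanged between consecutive bids, the more it matters.
    """
    issues = list(bids[0].keys())
    unchanged = {issue: 0 for issue in issues}

    # Compare each bid with the one that followed it.
    for previous, current in zip(bids, bids[1:]):
        for issue in issues:
            if previous[issue] == current[issue]:
                unchanged[issue] += 1

    # Add one to every count so that no issue gets a weight of zero,
    # then normalise so the weights sum to 1.
    scores = {issue: count + 1 for issue, count in unchanged.items()}
    total = sum(scores.values())
    return {issue: score / total for issue, score in scores.items()}


# Hypothetical bids from the seller of a house.
opponent_bids = [
    {"price": 300_000, "handover": "June", "furniture": "included"},
    {"price": 300_000, "handover": "July", "furniture": "excluded"},
    {"price": 300_000, "handover": "August", "furniture": "excluded"},
]

weights = estimate_issue_weights(opponent_bids)
most_important = max(weights, key=weights.get)
print(most_important, weights)
```

With only three bids the estimate remains very uncertain, which is exactly the small-data situation Jonker describes.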

AI software engineers can’t avoid ethical questions, such as: what is honest negotiation? A smartphone can detect live from someone’s voice whether they are excited by an offer. Similarly, the camera can detect stress in the opponent’s facial expressions. Jonker: ‘I chose not to build that into the app.’ The Pocket Negotiator only uses information willingly provided by the opponent. This is the kind of dilemma that Jonker addresses when she gives public lectures or talks to politicians.

Current AI systems don't give an acceptable explanation for their decisions

Francien Dechesne, Assistant Professor in Law & Digital Technology, is also trying to get the ethical dilemmas of AI on the agenda. She teaches Computing Ethics to computer science students and advises banks and judges. ‘Seemingly objective choices made by a software engineer have consequences for society,’ she stresses. In the US, algorithms are now being used to determine the risk of recidivism. This influences the judge’s sentencing. ‘But what exactly is recidivism? Is it committing another crime or being arrested again by the police? Concepts that are meaningful in a societal context are forcibly translated into measurable parameters that can be stuffed into an algorithm.’
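Dechesne's point about definitions can be made concrete with a small Python sketch. The four records below are invented purely for illustration, and using a new conviction as a stand-in for ‘committing another crime’ is itself an assumption. The same people produce very different recidivism rates depending on which definition the engineer picks.

```python
from dataclasses import dataclass


@dataclass
class Record:
    person: str
    rearrested: bool   # arrested again by the police
    reconvicted: bool  # convicted of a new crime (assumed proxy)


# Made-up records, not real data.
records = [
    Record("A", rearrested=True, reconvicted=True),
    Record("B", rearrested=True, reconvicted=False),
    Record("C", rearrested=True, reconvicted=False),
    Record("D", rearrested=False, reconvicted=False),
]


def recidivism_rate(records, is_recidivist):
    """Share of people counted as recidivists under a given definition."""
    return sum(is_recidivist(r) for r in records) / len(records)


rate_by_arrest = recidivism_rate(records, lambda r: r.rearrested)
rate_by_conviction = recidivism_rate(records, lambda r: r.reconvicted)

print(f"Defined as re-arrest:     {rate_by_arrest:.0%}")
print(f"Defined as re-conviction: {rate_by_conviction:.0%}")
```

An algorithm trained on the first definition learns to predict who gets arrested again, not who commits another crime; the choice looks technical but has consequences for everyone who is scored.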

An algorithm based on a neural network does not apply a set of rules installed by the software engineer; it discovers relevant patterns in training data all by itself: in this case, data about criminals and recidivism. Dechesne: ‘You can’t hold the system accountable and ask why it gave that person that score.’

And this problem doesn’t just concern criminals. Such algorithms can also be used to decide whether you get a mortgage or whether your tax return will be subjected to extra checks.

For both Jonker and Dechesne it is still very unclear who bears ultimate responsibility for the decisions – and bias – of these systems. The General Data Protection Regulation (GDPR), which came into effect in May 2018, states that people are entitled to meaningful information about how these kinds of decisions are reached. But how can this be enforced? Dechesne: ‘The Dutch Data Protection Authority should be able to tell a company that if it doesn’t sufficiently understand its own AI system, it won’t be allowed to use it for this purpose. But they don’t have the resources to do that at present.’