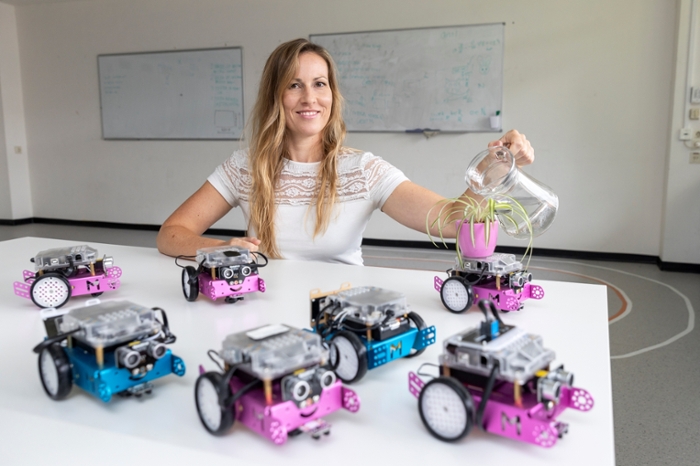

Tessa Verhoef: 'An algorithm still has a lot to learn from human interaction'

If an algorithm has to learn to understand language, simply having a lot of data doesn’t help much. Like us, a computer has to learn the language in interaction with others. Tessa Verhoef is fascinated by how this interaction works.

Verhoef started the Artificial Intelligence programme in Groningen in 2003 along with thirty other students. She was a front-runner; later the student numbers skyrocketed. ‘When I was studying, I became fascinated by language evolution and cultural evolution: how language and stories change because they’re passed on from person to person.’

Verhoef conducted experiments in which people had to learn a new kind of language and pass it on to others, not with words but by communicating spontaneously using a recorder. She uses the insights she gains from these experiments to help AI systems with natural language processing. This paves the way for machines to communicate with one another using a more natural communication system. ‘By replicating these evolution processes with machines, we can also learn a lot about how exactly natural language processing works in human interaction. Not only that, if this kind of interaction can take place between man and machine, we can carry out joint tasks more effectively. It’s this development process that I want to research.’

No shortage of test volunteers

Verhoef has an unconventional way of working. She does a lot of her research in museums and at festivals. ‘It’s great fun, and there are always plenty of volunteers who want to take part. At Lowlands I got people to pass on stories to one another. I wanted to find out which elements of the story got lost and which gained extra emphasis when a story was passed on a few times. That’s important to know if, for example, you want to teach an algorithm to detect misinformation. Elements with a shock factor, some kind of mystery or intrigue have a better chance of surviving and are often exaggerated, or new information is even added.’

Dangerous bogus messages

AI systems can already detect sentiments on social media, for example. Verhoef hopes that she can teach them to recognise fake news. ‘There are a lot of dangerous bogus messages about corona, for instance. Information from “pass the message” games can help train AI systems to recognise potentially false messages because of the presence of elements that are susceptible to alteration and exaggeration.’

Oddly enough, a useful AI system is not Verhoef’s main aim. What drives her is a fundamental interest in social interaction. ‘What I do is learning by building. We put all the knowledge we have into an AI system and if we see that it doesn’t work, we know that we still don’t fully understand it.’

Understanding language: the holy grail

In the AI world, a computer that can understand language is the holy grail. That grail is still a long way off: Google and Siri don’t understand anything and you can’t have a conversation with either of them. Verhoef: ‘Robots are sometimes used as social buddies and they certainly have a lot of potential to become buddies. But programming alone isn’t enough. Language is heavily dependent on social interaction: you talk to your mother differently from how you talk to a colleague. A machine needs social interaction with people to be able to hold a personal conversation.’ Artificial intelligence is shifting increasingly towards hybrid intelligence: the intelligence of man and machine together.

Text: Rianne Lindhout

Photo: Patricia Nauta